Nvidia's AI Dominance: Are We Witnessing a Monopoly in the Making?

Nvidia's chips are the picks and shovels of the AI gold rush. Everyone knows it, and their stock price reflects it. But beneath the surface of record earnings and soaring demand, a more complex picture emerges. Are we simply witnessing a company capitalizing on a technological breakthrough, or is something more concerning taking shape – a potential AI monopoly?

The numbers are staggering. Nvidia controls an estimated 80–95% of the market for high-end GPUs used in AI training and inference. This isn't just about having a good product; it's about a stranglehold on the infrastructure that powers the entire AI ecosystem. The H100 and now the H200 are the chips to have, and lead times have reportedly stretched to many months. Hyperscalers like Amazon, Microsoft, and Google are buying them up as fast as Nvidia can ship them, even as Nvidia keeps ramping up supply. But what about everyone else?

This creates a tiered system. The tech giants can afford to build out massive AI infrastructure, locking in their competitive advantage. Smaller companies and startups struggle to access the same resources, potentially stifling innovation. It's the digital equivalent of a land grab, where those with the deepest pockets claim the most valuable territory. I've looked at dozens of market reports, and the concentration of power in Nvidia's hands is hard to ignore.

The Network Effect and the CUDA Ecosystem

Nvidia's dominance isn't just about superior hardware; it's about CUDA, their proprietary parallel computing platform and programming model. CUDA is the glue that binds developers to Nvidia's ecosystem. It bundles compilers, debugging and profiling tools, and libraries such as cuBLAS and cuDNN that make it easier to develop and deploy AI applications on Nvidia GPUs. The more developers use CUDA, the larger the ecosystem becomes, and the harder it is for competitors to break in.

Think of it like Apple's iOS. While Android offers a wider range of hardware options, iOS has a loyal following of developers who are deeply invested in the platform. Similarly, many AI researchers and engineers have built their careers around CUDA. Switching to a different platform would require significant retraining and rewriting of code. This "lock-in" effect gives Nvidia a powerful competitive advantage. But how much of this lock-in is true innovation, and how much is strategic moat-building?

This is the part of the story that I find genuinely concerning. CUDA isn't open source. It's controlled by Nvidia. This gives them the power to dictate the terms of the AI revolution. They can choose which features to support, which developers to prioritize, and which platforms to enable. This level of control raises questions about fairness, competition, and the long-term health of the AI ecosystem.

The Quest for Alternatives and the Open Source Movement

The industry is aware of the risks associated with Nvidia's dominance, and efforts are underway to develop alternative hardware and software. AMD is trying to compete with its Instinct GPUs, and Intel is pushing its Gaudi chips. However, these alternatives face an uphill battle. They need not only to match Nvidia's performance but also to overcome the lock-in of the CUDA ecosystem.

The open-source movement is also playing a role. Projects like ROCm (AMD's open-source software platform) aim to provide a more open and accessible alternative to CUDA. However, they still lack the maturity and breadth of Nvidia's ecosystem: ROCm first shipped in 2016, nearly a decade after CUDA. Even so, these projects are crucial for the long-term health of the AI industry. We need a diverse and competitive market to foster innovation and prevent a single company from controlling the future of AI.

Is This Monopoly Sustainable?

Nvidia's current position is enviable, but the tech landscape is constantly shifting. History is littered with examples of companies that rose to dominate a market only to be overtaken by new technologies and competitors. Will Nvidia be able to maintain its dominance in the face of growing competition and the rise of open-source alternatives?

The answer, I suspect, lies in Nvidia's ability to adapt and innovate. They need to keep pushing the boundaries of AI hardware and software while also fostering a more open and collaborative ecosystem. If they cling too tightly to their proprietary technologies, they risk alienating developers and creating openings for competitors. Regulators are already paying attention: Nvidia's proposed $40 billion acquisition of Arm was abandoned in early 2022 amid significant regulatory challenges, including an FTC lawsuit to block the deal. That episode is a reminder that any further attempt to consolidate control over the AI stack is likely to face close scrutiny.

So, What's the Real Story?

Nvidia's got the gold, the rules, and the game. But history shows that empires built on closed systems rarely last forever.

Related Articles

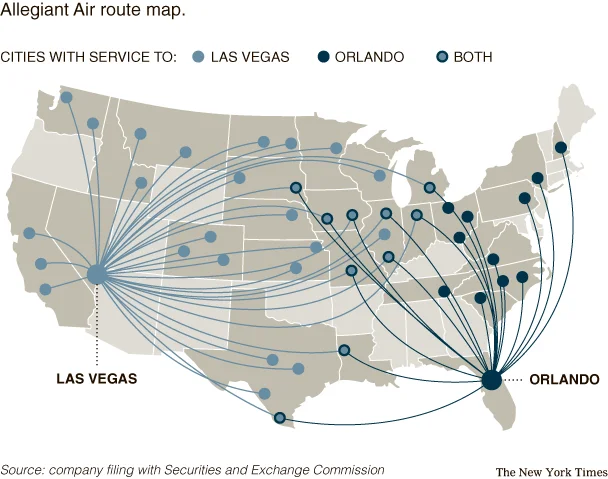

Allegiant Airlines Passenger Growth: What the 12.6% Surge Actually Means

More Passengers, Less Full Planes: Deconstructing Allegiant's Growth Paradox At first glance, news t...

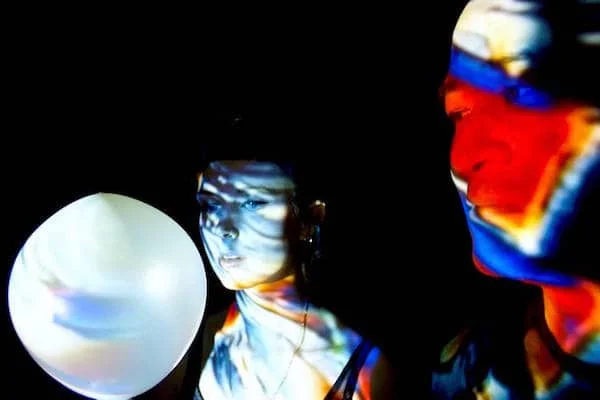

Julie Andrews: Why Her Legacy Endures Beyond Her Iconic Voice

I spend my days analyzing systems. I look at code, at networks, at AI, searching for the elegant des...

The End of Opaque Finance: How a Single Complaint Reveals the Need for a Tech-Driven Revolution

The Analog Crime in a Digital World When I read about the recent allegations against Vincent Ferrara...

The Surprising Tech of Orvis: Why Their Fly Rods, Jackets, and Dog Beds Endure

When I first saw the headlines about Orvis closing 31 of its stores, I didn't feel a pang of nostalg...

Analyzing 2025's Investment Landscape: What the Data Reveals About Top Asset Classes vs. Low-Risk Alternatives

There's a quiet, pervasive myth circulating among the baby boomer generation as they navigate retire...

Adrena: What It Is, and Why It Represents a Paradigm Shift

I spend my days looking at data, searching for the patterns that signal our future. Usually, that me...